By Kel Hahn

It is the summer of 2009. Ruigang Yang is enjoying the three-month respite between semesters as a visiting professor at Microsoft. One day, technicians familiar with Yang’s papers on computer vision, computer graphics, image processing and multimedia burst into his office. They want his opinion on a project in development, and the way they comport themselves tells Yang it’s something big. As they talk, he realizes they are pitching a new kind of motion tracking device, one that relies on a single low-cost camera. The device will be called the “Kinect,” and Microsoft will sell 8 million units during its first 60 days on the market.

As the fall semester began, Yang pondered the Kinect’s possibilities. Microsoft had developed it to enhance its gaming experience, but what else could such a device do? Could the technology be employed for purposes unrelated to gaming, and what would need to be different? “I shared this idea with my students: these interesting new low-cost cameras, what can we do with them?” Yang recounts. “One of my students got a motion tracking algorithm running, and I started looking for collaborators across campus interested in the idea of using a single camera to track motion.”

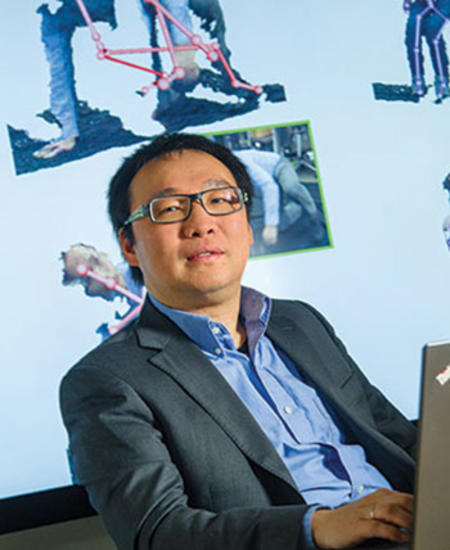

That is how Yang began an ongoing project with Brian Noehren, associate professor of physical therapy in the College of Health Sciences, and Robert Shapiro, professor of kinesiology in the College of Education with a joint appointment in the Department of Biomedical Engineering. Since 2012, the team has worked to create a low-cost, high-accuracy motion tracking device that physicians and physical therapists can use in their offices, in rural areas, at injury sites or virtually anywhere else. In particular, Yang says, they want to help physical therapists gauge how well patients are recovering from anterior cruciate ligament (ACL) injuries.

“Right now physical therapists don’t really have a way to quantify outcomes for their patients. If we can give them a low-cost device that lets them measure and say, ‘Okay, last time you could bend your leg only five degrees, but today’s measurement shows you can bend it 10 degrees,’ then they can demonstrate quantifiable improvement. That is important for their work with patients, but it also gives them something to show insurance companies that are moving toward outcome-based reimbursement models.”

Drawing on Noehren and Shapiro’s expertise, the team has identified 72 distinct markers on the human body that signify joints for motion tracking. When an individual is scanned, the 72 points are plotted automatically, giving the team an anatomically correct map of his or her body. And because timing is everything, the software runs at 10 to 15 frames per second, generating a complete 3-D motion model in seconds.
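To illustrate how tracked joint positions can yield the kind of number Yang describes (“you can bend it 10 degrees”), here is a minimal sketch. It is not the team’s algorithm: it assumes, hypothetically, that the tracker reports 3-D coordinates for three markers named `hip`, `knee` and `ankle` in each frame, and it measures knee flexion as the deviation from a straight leg.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical representation: one 3-D marker position (x, y, z).
Point = Tuple[float, float, float]


def _sub(a: Point, b: Point) -> Point:
    # Vector pointing from b to a.
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Point, b: Point) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def knee_flexion_deg(hip: Point, knee: Point, ankle: Point) -> float:
    """Knee flexion in degrees: 0 for a straight leg, larger as the knee bends."""
    thigh = _sub(hip, knee)   # knee -> hip
    shank = _sub(ankle, knee)  # knee -> ankle
    cos_theta = _dot(thigh, shank) / math.sqrt(_dot(thigh, thigh) * _dot(shank, shank))
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    interior = math.degrees(math.acos(cos_theta))  # angle between thigh and shank
    return 180.0 - interior


def peak_flexion_deg(frames: List[Dict[str, Point]]) -> float:
    """Largest knee flexion across a recorded sequence of frames (assumed marker names)."""
    return max(knee_flexion_deg(f["hip"], f["knee"], f["ankle"]) for f in frames)
```

Comparing `peak_flexion_deg` from one session with the next would give a therapist a single number to track over time; a real system would also need to cope with marker noise and occlusion, which this sketch ignores.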

“What we have built is a low-cost motion tracking capture system that works in real time,” Yang says. “Our algorithm is the best in the published literature and the device is more accurate than if we were to use the tracking software provided by Microsoft for the same purpose. The feedback has been very positive.”

Although Yang is a full professor at UK, has won a National Science Foundation CAREER Award and saw his research cited more than 1,700 times last year alone, he has never developed a product for the market. His extensive contributions to the field of 3-D modeling and sensing, often consulted by product developers, appear mostly in technical papers. But thanks to Noehren, Shapiro and funding through the NSF, their motion tracking device is closer to becoming a reality. “This collaboration has been quite different from writing a paper,” Yang laughs. “I have done a number of research projects and published papers in some of the best journals, but this is the first time I have tried to make a product that would potentially be used by hundreds of thousands of people. It is a new and exciting venture for me.”